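While analyzing data for a project, I found it very hard to pull information from a specific Zhihu question. Zhihu renders its pages in a complicated way, and the guides online are either outdated or paywalled, which is really frustrating. Below are the problems I ran into.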

Trying a web tool (Web Scraper)

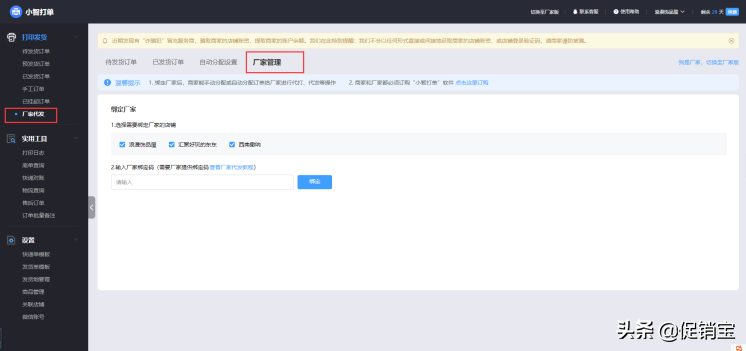

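This web tool is a simple no-code data collector that runs as a browser extension, which you can find in the extension store. Press F12 to open it; when setting it up, you first define the region (the container element) and then select the content inside it. It works by simulating scrolling through the page to the end and scraping the data as it loads, which suits lightweight collection jobs. With the 2,000+ answers I needed to process, however, it could fail to scroll all the way to the end or crash outright. For small amounts of data it should be fine: just put your task name in the `_id` field and replace the question ID in the `startUrl` link.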
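Since only the task name (`_id`) and the question number inside `startUrl` change from one task to the next, a small script can fill them into an exported sitemap. This is a minimal sketch: `make_sitemap` is a hypothetical helper, and it assumes the question number sits between `/question/` and the next `/` in each start URL.

```python
import copy
import json
import re


def make_sitemap(template: dict, task_name: str, question_id) -> dict:
    """Return a copy of a Web Scraper sitemap with a new task name and question ID."""
    sitemap = copy.deepcopy(template)  # leave the original template untouched
    sitemap["_id"] = task_name
    qid = str(question_id)
    # Replace whatever sits between "/question/" and the next "/" with the new ID.
    # A function replacement avoids "\1" + digits being misread as a group number.
    sitemap["startUrl"] = [
        re.sub(r"(/question/)[^/]+", lambda m: m.group(1) + qid, url)
        for url in sitemap["startUrl"]
    ]
    return sitemap


if __name__ == "__main__":
    template = {
        "_id": "name",
        "startUrl": ["https://www.zhihu.com/question/xxxxxxxxx/answers/updated"],
        "selectors": [],  # paste the full selector list from your exported sitemap here
    }
    print(json.dumps(make_sitemap(template, "my_task", "123456789"), ensure_ascii=False))
```

The printed JSON can then be pasted into Web Scraper's sitemap import; the selectors are copied unchanged.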

```json
{
  "_id": "name",
  "startUrl": ["https://www.zhihu.com/question/xxxxxxxxx/answers/updated"],
  "selectors": [
    {
      "id": "block",
      "parentSelectors": ["_root"],
      "type": "SelectorElementScroll",
      "selector": "div.List-item:nth-of-type(n+2)",
      "multiple": true,
      "delay": 2000,
      "elementLimit": 2100
    },
    {
      "id": "content",
      "parentSelectors": ["block"],
      "type": "SelectorText",
      "selector": "span[itemprop='text']",
      "multiple": true,
      "regex": ""
    },
    {
      "id": "user",
      "parentSelectors": ["block"],
      "type": "SelectorLink",
      "selector": ".AuthorInfo-name a",
      "multiple": true,
      "linkType": "linkFromHref"
    },
    {
      "id": "date",
      "parentSelectors": ["block"],
      "type": "SelectorText",
      "selector": ".ContentItem-time span",
      "multiple": true,
      "regex": ""
    }
  ]
}
```

Trying again with another method

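This approach works much like the web tool: it has to scroll to the bottom of the page to load content. It runs into the same problem, too: the page responds slowly once you have scrolled far down, and Zhihu's temporary data storage also leaves the collected data incomplete. It may still be of some use for simple tasks, but you have to prepare the required login materials yourself; otherwise getting past the login step is hard and quite a hassle.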
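The scroll-to-the-bottom loop these methods rely on can at least be bounded so it does not hang when the page slows down. Below is a minimal sketch of that logic; `scroll_until_stable` is a hypothetical helper, and scrolling and height measurement are passed in as functions so it does not depend on any particular browser driver. It stops once the page height has stopped growing for a few rounds, or after a hard cap.

```python
import time
from typing import Callable


def scroll_until_stable(scroll: Callable[[], None],
                        page_height: Callable[[], int],
                        max_rounds: int = 200,
                        patience: int = 3,
                        wait: float = 2.0) -> int:
    """Scroll repeatedly until the page height stops growing.

    Stops after `patience` consecutive rounds without growth, or after
    `max_rounds` rounds in total. Returns the number of rounds performed.
    """
    last_height = page_height()
    unchanged = 0
    rounds = 0
    while rounds < max_rounds and unchanged < patience:
        scroll()
        rounds += 1
        time.sleep(wait)  # give the page time to load the next batch
        height = page_height()
        if height > last_height:
            last_height = height
            unchanged = 0  # new content arrived, reset the counter
        else:
            unchanged += 1
    return rounds
```

With Selenium, the two callables could be `lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")` and `lambda: driver.execute_script("return document.body.scrollHeight")`. This only keeps the loop from running forever; it does nothing about the incomplete data caused by Zhihu's temporary storage.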

Trying it with BeautifulSoup

```python
import json
import random
import time

import pandas as pd
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By


def scrape1(question_id):
    user_agents = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3',
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36',
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.116 Safari/537.36',
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.212 Safari/537.36'
    ]

    url = f'https://www.zhihu.com/question/{question_id}'  # question_id: the question's numeric ID

    # Create an Options object and set a random User-Agent header
    options = Options()
    options.add_argument("--user-agent=" + random.choice(user_agents))
    # options.add_argument("--headless")

    # Load cookies exported from a logged-in browser session
    with open('cookie.json', 'r', encoding='utf-8') as f:
        cookies = json.load(f)

    # Pass the options when creating the WebDriver
    driver = webdriver.Chrome(options=options)
    driver.get(url)  # cookies can only be added for the domain currently loaded
    driver.delete_all_cookies()
    for cookie in cookies:
        driver.add_cookie(cookie)
    time.sleep(2)
    driver.refresh()
    time.sleep(5)  # wait for the page to finish loading

    # question = driver.find_element(
    #     By.CSS_SELECTOR, 'div[class="QuestionPage"] meta[itemprop="name"]').get_attribute('content')
    # while True:
    #     # Scroll to the bottom of the page
    #     print('scrolling to bottom')
    #     driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
    #     time.sleep(random.randint(5, 8))  # time to wait for new content; adjust as needed
    #     # Stop loading once the element at the bottom of the page is found
    #     try:
    #         driver.find_element(By.CSS_SELECTOR, 'button.Button.QuestionAnswers-answerButton')
    #         print('reached the end')
    #         break
    #     except Exception:
    #         pass

    html = driver.page_source
    driver.quit()

    # Parse the HTML
    soup = BeautifulSoup(html, 'html.parser')
    # Get the tags of all answers
    answers = soup.find_all('div', class_='List-item')

    contents = []
    answer_ids = []
    for answer in answers:
        # Assumption: the answer ID is stored in the `name` attribute of div.ContentItem;
        # check this in DevTools before relying on it
        item = answer.find('div', class_='ContentItem')
        answer_ids.append(item.get('name') if item else None)
        # Get the answer's text (empty string if the block is missing)
        inner = answer.find('div', class_='RichContent-inner')
        contents.append(inner.get_text() if inner else '')

    # Build the DataFrame from equal-length lists so the columns line up
    df = pd.DataFrame({'answer_id': answer_ids, 'content': contents})
    df.to_csv(f'{question_id}.csv', index=False, encoding='utf-8')
```

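This is the workable approach I eventually found. The key point is that it can be paused midway and resumed, so the results are usable without waiting for the whole run to finish. After going through the relevant material, I found that the basic logic of the original code was as shown above, but it did not fit my use case. My idea was to adapt the code to obtain the necessary data first, and then use that data to extract the answers in full.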

Getting the template URL

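To get the template, first open the page with the answers you want, then refresh it and look for the relevant request in the browser's developer tools (F12). However, the data in that request URL always came back empty, which shows this route doesn't work. Instead, try starting from the first URL provided and following its `next` link; that gets you the content of the corresponding answers.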
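Following the `next` link can be automated. This is a minimal sketch, assuming each API page is JSON shaped like `{"data": [...], "paging": {"is_end": ..., "next": ...}}` (verify against the actual response in DevTools). `iter_answers` is a hypothetical helper, and `fetch_json` is any function that downloads a URL and returns the parsed JSON.

```python
from typing import Callable, Iterator


def iter_answers(first_url: str,
                 fetch_json: Callable[[str], dict],
                 max_pages: int = 1000) -> Iterator[dict]:
    """Yield answer objects page by page, following the `paging.next` link."""
    url = first_url
    for _ in range(max_pages):  # hard cap in case the API never reports the end
        page = fetch_json(url)
        yield from page.get("data", [])
        paging = page.get("paging", {})
        # Stop when the API says this was the last page or gives no next link
        if paging.get("is_end", True) or not paging.get("next"):
            break
        url = paging["next"]
```

With `requests`, `fetch_json` could be `lambda u: requests.get(u, headers=headers, timeout=10).json()`. Following the server-supplied link avoids computing offsets by hand.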

Scraping the content with that information

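Once you have the necessary information, start collecting. Make a backup after every hundred items so that an accident does not wipe out all the work done so far. That way, even if you hit an obstacle along the way, the loss is small, you can continue from where you left off, and the whole job goes faster.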

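```python
import csv
import time

import requests

# Assumption: copy these headers from the request your browser sends (placeholder values)
headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.212 Safari/537.36',
    'cookie': 'YOUR_COOKIE_HERE',
}

# URL template
template = 'https://www.zhihu.com/api/v4/questions/432119474/answers?include=data%5B*%5D.is_normal%2Cadmin_closed_comment%2Creward_info%2Cis_collapsed%2Cannotation_action%2Cannotation_detail%2Ccollapse_reason%2Cis_sticky%2Ccollapsed_by%2Csuggest_edit%2Ccomment_count%2Ccan_comment%2Ccontent%2Ceditable_content%2Cattachment%2Cvoteup_count%2Creshipment_settings%2Ccomment_permission%2Ccreated_time%2Cupdated_time%2Creview_info%2Crelevant_info%2Cquestion%2Cexcerpt%2Crelationship.is_authorized%2Cis_author%2Cvoting%2Cis_thanked%2Cis_nothelp%2Cis_labeled%2Cis_recognized%2Cpaid_info%2Cpaid_info_content%3Bdata%5B*%5D.mark_infos%5B*%5D.url%3Bdata%5B*%5D.author.follower_count%2Cbadge%5B*%5D.topics%3Bsettings.table_of_content.enabled%3B&offset={offset}&limit=5&sort_by=default&platform=desktop'
limit = 5  # answers per request; must match limit=5 in the template

# 'answers.csv' is a placeholder file name
with open('answers.csv', 'w', newline='', encoding='utf-8') as f:
    writer = csv.DictWriter(f, fieldnames=['author', 'id', 'text'])
    writer.writeheader()
    for page in range(100):
        # Request page `page`; offset counts answers, not pages
        url = template.format(offset=page * limit)
        resp = requests.get(url, headers=headers, timeout=10)
        resp.raise_for_status()
        result = resp.json()
        # Parse the answers on this page
        for info in result['data']:
            data = {'author': info['author']['name'],  # assumption: author object has a 'name' field
                    'id': info['id'],
                    'text': info['excerpt']}
            # Save to CSV
            writer.writerow(data)
        # Stop early once the API reports there are no more answers
        if result.get('paging', {}).get('is_end'):
            break
        # Slow the crawler down to go easy on Zhihu
        time.sleep(1)
```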
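To get the "back up every hundred items and resume" behavior described above, one option is to append to the CSV, force a flush to disk every 100 rows, and skip IDs that are already on disk when restarting. This is a minimal sketch; `save_rows`, `load_done_ids`, and the file names are hypothetical, and the three columns mirror the `data` dict in the loop above.

```python
import csv
import os
import tempfile
from typing import Iterable, Set

FIELDS = ["author", "id", "text"]


def load_done_ids(path: str) -> Set[str]:
    """Read the IDs saved by a previous run so a restart can skip them."""
    if not os.path.exists(path):
        return set()
    with open(path, newline="", encoding="utf-8") as f:
        return {row["id"] for row in csv.DictReader(f)}


def save_rows(rows: Iterable[dict], path: str, every: int = 100) -> int:
    """Append new rows to `path`, syncing to disk every `every` rows.

    Returns how many new rows were written in this call."""
    done = load_done_ids(path)
    new_file = not os.path.exists(path) or os.path.getsize(path) == 0
    written = 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if new_file:
            writer.writeheader()
        for row in rows:
            key = str(row["id"])  # CSV stores IDs as text
            if key in done:
                continue  # already saved in an earlier run
            writer.writerow(row)
            done.add(key)
            written += 1
            if written % every == 0:
                f.flush()
                os.fsync(f.fileno())  # checkpoint: this batch is now on disk
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "answers.csv")
        rows = [{"author": "a", "id": i, "text": "t"} for i in range(250)]
        print(save_rows(rows[:120], path))  # first run, interrupted after 120 rows
        print(save_rows(rows, path))        # rerun only writes the remaining rows
```

`rows` can be any iterable of dicts, for example a generator that yields the `data` dict for each answer, so an interrupted run loses at most the last unsynced batch.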

Summary and tips

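Collecting this data was full of obstacles, and I tried several approaches before one worked. If you run into a similar situation later, you can draw on my experience and avoid the detours. Choose the approach that fits the size of the job; otherwise you will spend a lot of time and effort.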

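Have you run into any strange situations while collecting data? If you found this article helpful, don't forget to give it a like and share it with others.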